Table of Contents

1. Data Warehouse - Analysis and Design

1.1. Introduction to Data Warehouse

1.2. Data Warehouse Rationale

1.3. Data Warehouse Design Approach

1.4. Data Warehouse Design Methodology

1.5. Schema Types

1.6. Star Schema Design

1.7. Data Dictionary

1.8. Granularity of Data

1.9. ETL Script

1.10. Decisions of Data Maintenance and Slowly Changing Dimensions

1.11. Data Warehouse Interface Suggestions

2. Forward Engineering of Star Schema Using QSEE

2.1. Star Schema Set Up

2.2. Data Gathering

References

1. Data Warehouse - Analysis and Design

On the basis of

given scenario, it is found that Leeds City Council is interested in the

benefits they could get from the data they have available. Since Leeds City

Council is responsible for the provision of public transport in the regions

along with ensuring the quality of the roads, lighting and services, they have

asked me to represent data warehousing on the project which would help them to

improve the use of public transport in the region and promote ‘healthy and

environmentally friendly’ initiatives such as ‘cycle to work’ schemes. Thus, my

task is to create a document that describes and outlines major key factors for

their data warehouse design. So, let’s begin the document with introduction to

data warehouse.

1.1. Introduction to Data Warehouse

A Data Warehouse simply is a database designed to

enable business intelligence activities which helps users to understand and

enhance organization’s performance as it is designed for query analysis rather

than for transaction processing, and usually contains historical data derived

from transaction data but can include data from multiple heterogeneous sources (Docs.oracle.com,

2016).

According to W.H. Inmon (1992), “A data warehouse is a

subject-oriented, integrated, time-variant, and non-volatile collection of data

in support of management’s decision-making process”. Thus, the characteristics

to be a data warehouse according to Inmon are discussed below:

a.

Subject-oriented: Here, subject oriented means organized around major

subjects, like customer, sales, etc. It provides a simple and concise view

around particular subject issues by excluding data that are not useful in the

decision support process (Bellachia, 2016).

b.

Integrated: Integrated means, removing naming conflicts and

inconsistencies among units of measure, while constructing data warehouse by

integrating multiple, heterogeneous data sources like relational database, flat

files, online transaction records, etc.

c.

Time-variant: Here, Time-variant refers to the time horizon of the

data warehouse which is significantly longer than that of operational systems.

It means that the data warehouse contains historical data which assists to

discover trends and identify hidden patterns and relationships in business.

d.

Nonvolatile: Nonvolatile means the data are stored in read only

format in data warehouse i.e. once entered into data warehouse, data should not

change.

Other key characteristics of Data warehouse are

i.

Data is structured

for simplicity of access and high-speed query performance.

ii.

End users are

time-sensitive and desire speed-of-thought response times.

iii.

Large amount of

historical data are used.

iv.

Queries often

retrieve large amounts of data, perhaps many thousands of rows.

v.

Both predefined

and ad hoc queries are common.

vi.

The data load

involves multiple sources and transformations.

Some of the key benefits of implementing data

warehousing mechanism in Leeds City Council are discussed below

i.

Better Decision

Making: Data warehouse stores

credible facts and statistics which will be useful on making better business

decisions.

ii.

Quick and easy

access to data: Business users

will be able to access data from multiple sources which allows them to make

quick and accurate decisions with no or little support from an IT.

iii.

Data quality and

consistency: Since data warehouse

gather information from different sources and store it into a single, widely

used format, departments will produce outcomes that are accurate and consistent

with each other.

1.2.

Data

Warehouse Rationale

In a world of business today, fast response and quick

decision making are key to success (Lapluea, 2016). This is where data

warehouse comes in need. Data warehouse consists of both, current as well as

historical data that are useful for quick and best decision makings. At Leeds

City Council, there is huge amount of unmanaged random data (current as well as

historical) about the transport systems, condition, types of vehicles, quality

of roads, lighting and services, etc. Thus, Leeds City Council is in need of

such system that allows them to analyze the past road conditions and services,

and make future decisions to improve the use of public transport and promote

‘healthy and environmentally friendly’ initiatives.

“A data warehouse by itself does not create value but

value comes from the use of the data in the warehouse.” (Parzinger and Frolick, 2001).

Developing a data warehouse is not an easy task but it

is beneficial as it helps in crucial decision making. It also allows users to

perform extensive analysis. Beside this, data into the warehouse are integrated

(changes and updates are not allowed) but can be shared across the business

units. Making a data warehouse will centralize and standardize the data, and

will save current as well as the data from past. And to make future decisions

on providing the good quality public transport, good roads, lighting and

services, Leeds City Council need to analyze the data from past and know the

changes, challenges, opportunities, etc. during this long period of time. And

the data required for analysis and decision making process is obtained by

making data warehouse. Thus, the Leeds City Council will be benefitted by

making data warehouse for analyzing and decision making purpose which will

assist to improve the use of public transport in the region and promote

‘healthy and environmentally friendly’ initiatives.

1.3. Data Warehouse Design Approach

When designing a data warehouse solution, there are

two major approaches: Third Normal Form or Top-Down Data Warehouse design

approach also known as “Inmon” for its creator William Inmon and other is

Dimensional or Bottom – Up Data Warehouse design approach also known as

“Kimball” after Ralph Kimball. A third approach known as “Data Vault”,

developed by Dan Lindstedt has also seen an increase in usage since its

unveiling in the early 2000’s and serves as a bit of a hybrid between the two (MacLauchlan,

2013). On the basis of requirements of any project one of the approaches can be

selected. Further discussion on these approaches is as follows

a.

Bill Inmon –

Top-Down Data Warehouse Design Approach (Third Normal Form)

This

methodology is based on top-down approach which was created by Bill Inmon also

known as “Father of data warehousing”. Here, the data warehouse is typically

built to be enterprise wide and maintenance is relatively simple because 3NF is

easily human readable. It requires hefty ETL to integrate the source data into

the 3NF format, and again to convert the warehouse to a dimensional model for

the data marts.

b.

Ralph Kimball -

Bottom-up Data Warehouse Design Approach (Dimensional)

Kimball,

also known as Bottom up Data warehouse design approach is one of the best

design approach where dimensional data marts are first created to provide

reporting and analytical capabilities for specific business areas. Here, data

marts are integrated to create a data warehouse using a bus architecture and

consists of conformed dimensions between all the data marts. In this approach

data warehouse is broken down into a number of logically self-contained and

consistent data marts, rather than a big and complex centralized data

warehouse.

c.

Data Vault

Among

different data modeling approach, Data vault is designed to provide long-term

historical data from multiple sources. In this model, every data is stored and

every relationship is defined. Here, expanding an entity is quick and painless

by the use of satellite records. Though optimal for data warehousing, feeding

data marts out of the Data Vault can be a bit more complicated than the Kimball

method (MacLauchlan, 2013). This is a hybrid solutions of Bill Inmon’s Top down

model (3NF) and Kimball’s bottom up approach (Star Schema), and Dan Linstedt,

the creator of the method, describes the resulting database as follows:

“The Data Vault

Model is a detail oriented, historical tracking and uniquely linked set of

normalized tables that support one or more functional areas of business.”

Kimball is the most frequently used methodology as the

results can be seen quickly and can be developed iteratively (one mart at a

time). As the definition says “Data warehouse is copy of transaction data

specifically structured for query analysis”, the Data warehouse/BI is

decomposed into manageable pieces by focusing on the organization’s core

business processes, along with the associated conformed dimensions. Hence, I

recommend Leeds City Council to use Kimball’s Bottom up Data warehouse design

approach as it could be more beneficial in transport department to analyze the

traffic status and changes in quality, quantity of traffic throughout the

years. And it will also reduce time needed for reporting, lower data latency,

and deliver more detailed, analytically useful information.

1.4. Data Warehouse Design Methodology

Some of the major data warehouse design methodology

are discussed below

a.

OLTP

OLTP

is an acronym for On-line Transaction Processing which is characterized by a

large number of short transactions like INSERT, UPDATE DELETE. Hence, OLTP can

be defined as an information system type that prioritizes transaction

processing, dealing with operational data. In OLTP database, there is detailed

and current data, and schema used to store transactional databases is the

entity model (usually 3NF) (Datawarehouse4u.info,

2016). In OLTP database system data is frequently updated, requiring an

effective write operations support. The main purpose of OLTP data is to control

and run fundamental business tasks.

b.

OLAP

OLAP is an acronym

for On-line Analytical Processing which is a computer technology term referring

to systems focused on analyzing data in specific databases, and are

characterized for their analytical capabilities, addressing multi-dimensional

or one dimension data, processing all the information (DataOnFocus, 2016).

Thus, this kind of databases are based on READ operations, aggregating all

available information. This methodology is mostly applied in data warehouses

which helps in optimizing, reading and aggregation operations of its

multidimensional data model. The main purpose of OLAP data is to help with

planning, problem solving, and decision support.

Among

these two methodologies, i.e. OLTP and OLAP, OLAP will be sued for transport

department of Leeds City Council as it provides better performance for

accessing multidimensional data by the use of aggregations. Beside this, OLAP

system gives analytical capabilities (like forecasting and analyzing) that are

not in SQL or are more difficult to obtain. And OLAP’s multidimensional view of

data also provides the foundation for the analytical processing through

flexible information access. Thus, OLAP is more beneficial for the Leeds City Council

for analytical purposes of road quality, vehicle used on the roads, forecasting

vehicle growth per year, etc.

Possible

reports that could be generated using the OLAP data warehouse of transport

department of Leeds City Council are as follows

i.

Total number of vehicles used in particular year in

particular region.

ii.

Types of vehicles and their numbers.

iii.

Length of roads and the number of vehicles used on that

road on a particular year.

iv.

Local authority that takes care of roads and vehicles on

particular region on particular year, etc.

1.5. Schema Types

Schema, a collection of database objects, including

tables, views, indexes, and synonyms. It is a logical description of an entire

database. Like relational model for databases, Data warehouse uses different

schema. They are as follows:

a.

Star Schema: Star Schema is probably the simplest data warehouse

schema and resembles to the star with points radiating from center, so called

star schema. This architecture consists of large and normalized (3NF) fact

table at the center, and small de normalized, multiple dimension tables at the

points. A star query joins fact table and a number of dimension tables using a

primary key to foreign key join, but the dimension tables are not joined to

each other.

b.

Snowflake Schema: A snowflake schema is an advanced form of star schema

and is more complex than star schema. As the model resembles to a snowflake, it

got its name. Unlike star schema, dimension tables are normalized in snowflake

schema which reduces data redundancy. This normalization splits up the data

into additional tables and gives the snowflake architecture. Snow flake schema

are useful when there are low cardinality attributes in the dimensions.

c.

Fact Constellation

Schema: Fact Constellation

Schema, also called galaxy schema is another data warehouse architecture which

contains multiple fact tables and resembles to the constellation in the sky. The

main shortcoming of the fact constellation schema is a more complicated design

because many variants for particular kinds of aggregation must be considered

and selected (Datawarehouse4u.info, 2016). Moreover, dimension tables are still

large.

In order to develop data warehouse of transport

department of Leeds City Council, star schema is implemented as it allows to

quickly understand, navigate and analyze large multidimensional data sets.

Since this approach has fewer tables and clear join paths, query will run

faster than OLTP system. It also reduces the time required to load large

batches of data. Thus, the Star Schema will be more beneficial to analyze the

large amount of data in Leeds City Council.

1.6. Star Schema Design

Star Schema design for the transport department of

Leeds City Council is as follows

|

| Star Schema for Leeds City Transport |

1.7. Data Dictionary

A.

Dimension Tables

a.

Dim_time

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

Time_id

|

Integer

|

Primary Key

|

Unique Year ID

|

2.

|

year

|

Date

|

Not Null

|

Full Date

|

b.

Dim_region

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

region_id

|

Integer

|

Primary Key

|

Unique Region ID

|

2.

|

Region_name

|

Varchar

|

Not Null

|

Full name of the region

|

c.

Dim_local_authority

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

Local_authority_id

|

Integer

|

Primary Key

|

Unique Local Authority ID

|

2.

|

Loca_authority_name

|

Varchar

|

Not Null

|

Full name of local authority

|

d.

Dim_road

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

road_id

|

Integer

|

Primary Key

|

Unique Road ID

|

2.

|

Road_name

|

varchar

|

Not Null

|

Full name of road

|

3.

|

Start_junction

|

varchar

|

Name of Start Junction of the road

|

|

4.

|

End_junction

|

varchar

|

Name of End Junction of the road

|

|

5.

|

Link_length

|

float

|

Length of the road

|

e.

Dim_road_category

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

Road_category_id

|

Integer

|

Primary Key

|

Unique road category ID

|

2.

|

Road_category

|

Varchar

|

Not Null

|

Full name of Road Category

|

f.

Dim_vehicles

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

vehicle_id

|

Integer

|

Primary Key

|

Unique Local Authority ID

|

2.

|

Pedal_cycle

|

Varchar

|

Not Null

|

Number of Pedal Cycles

|

3.

|

Motorcycle

|

Integer

|

Number of Motorcycles

|

|

4.

|

Car_taxis

|

Integer

|

Number of Car/Taxis

|

|

5.

|

Bus_coaches

|

Integer

|

Number of Bus/Coaches

|

B.

Fact Table

Fact_transport

S.N.

|

Field Name

|

Data Type

|

Constraints

|

Description

|

1.

|

Id

|

Integer

|

Primary Key

|

Unique Id for the table

|

2.

|

time_id

|

Integer

|

Not Null, Foreign Key

|

Unique Time ID

|

3.

|

Region_id

|

Integer

|

Not Null, Foreign Key

|

Unique Region ID

|

4.

|

Local_authority_id

|

Integer

|

Not Null, Foreign Key

|

Unique Local Authority ID

|

5.

|

Road_id

|

Integer

|

Not Null, Foreign Key

|

Unique Road ID

|

6.

|

Road_category_id

|

Integer

|

Not Null, Foreign Key

|

Unique Road Category ID

|

7.

|

Vehicle_id

|

Integer

|

Not Null, Foreign Key

|

Unique Vehicle ID

|

8.

|

Total_vehicle

|

Integer

|

Not Null, Surrogate Key

|

Total number of vehicles

|

1.8. Granularity of Data

Granularity of data in the data warehouse refers to

the level of detail available in the data elements and is concerned with the

level of summarization of the data elements. The more detail data that is

available, the lower the level of granularity. Conversely, the lower the level

of detail, the higher the level of granularity (or level of summarization of

the data elements).

Granularity is an important aspect of data warehouse

as it (granular data) can be summarized, aggregated, broken into many different

subsets and so forth. Granularity also offers the opportunity for trade-off

between important issues in data modeling like, performance versus volume of

data (and the related cost of storing that data), the ability to access data at

a very detailed level versus performance and the cost of storing and accessing

large volumes of data (Ballard et al.,

1998).

1.9. ETL Script Design

ETL is an acronym for Extraction, Transformation and

Loading. It is a process to get data out of the source and load it into the

data warehouse. Simply, it is a process of copying data from one database to

other. Here, data is extracted from an OLTP database, transformed to match the

data warehouse schema and loaded into the OLAP data warehouse database (Priya

and Sultana A., 2016). Using an ETL tool data can be extracted from flat files

like spreadsheets and CSV files and load it into an OLAP data warehouse for

data analysis and reporting.

The ETL script to design fact and dimension table for

the data warehouse of transport department of Leeds City Council are as follows

QSEE generated scripts of the Star Schema developed is

as follows

--------------------------------------------------------------

-- Database creation Script

-- Auto-Generated by QSEE-SuperLite (c)

2001-2004 QSEE-Technologies Ltd.

-- Verbose generation: ON

-- note: spaces within table/column

names have been replaced by underscores (_)

-- Target DB: SQL2

-- Entity Model :Entity Relationship

Diagram

-- To drop the tables generated by this

script run -

--

'E:\The British College\Advance

Database\Assignments\project\final_leeds_transport_drop.sql'

--------------------------------------------------------------

--------------------------------------------------------------

-- Table Creation --

-- Each entity on the model is

represented by a table that needs to be created within the Database.

-- Within SQL new tables are created

using the CREATE TABLE command.

-- When a table is created its name and

its attributes are defined.

-- The values of which are derived from

those specified on the model.

-- Certain constraints are sometimes

also specified, such as identification of primary keys.

-- Create a Database table to represent

the "dim_year" entity.

CREATE TABLE dim_time(

time_id INTEGER NOT NULL,

year DATE NOT NULL,

-- Specify the PRIMARY KEY constraint for table

"dim_year".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_dim_time

PRIMARY KEY (time_id)

);

-- Create a Database table to represent

the "dim_region" entity.

CREATE TABLE dim_region(

region_id INTEGER NOT NULL,

region_name VARCHAR(100) NOT NULL,

-- Specify the PRIMARY KEY constraint for table

"dim_region".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_dim_region

PRIMARY KEY (region_id)

);

-- Create a Database table to represent

the "dim_local_authority" entity.

CREATE TABLE

dim_local_authority(

local_authority_id INTEGER NOT NULL,

local_authority_name VARCHAR(100) NOT NULL,

-- Specify the PRIMARY KEY constraint for table

"dim_local_authority".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_dim_local_authority

PRIMARY KEY (local_authority_id)

);

-- Create a Database table to represent

the "dim_road" entity.

CREATE TABLE dim_road(

road_id INTEGER NOT NULL,

road_name VARCHAR(100) NOT NULL,

start_junction VARCHAR(100),

end_junction VARCHAR(100),

link_length DECIMAL(8,2),

-- Specify the PRIMARY KEY constraint for table

"dim_road".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_dim_road

PRIMARY KEY (road_id)

);

-- Create a Database table to represent

the "dim_road_category" entity.

CREATE TABLE

dim_road_category(

road_category_id INTEGER NOT NULL,

road_category VARCHAR(100) NOT NULL,

-- Specify the PRIMARY KEY constraint for table

"dim_road_category".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_dim_road_category

PRIMARY KEY (road_category_id)

);

-- Create a Database table to represent

the "dim_vehicles" entity.

CREATE TABLE dim_vehicles(

vehicle_id INTEGER NOT NULL,

pedal_cycle INTEGER NOT NULL,

motorcycle INTEGER NOT NULL,

car_taxis INTEGER NOT NULL,

buses_coaches INTEGER NOT NULL,

-- Specify the PRIMARY KEY constraint for table

"dim_vehicles".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_dim_vehicles

PRIMARY KEY (vehicle_id)

);

-- Create a Database table to represent

the "fact_transport" entity.

CREATE TABLE

fact_transport(

fact_transport_id INTEGER NOT NULL,

time_id INTEGER NOT NULL,

region_id INTEGER NOT NULL,

local_authority_id INTEGER NOT NULL,

road_id INTEGER NOT NULL,

road_category_id INTEGER NOT NULL,

vehicle_id INTEGER NOT NULL,

total_vehicle INTEGER,

fk1_year_id INTEGER NOT NULL,

fk2_region_id INTEGER NOT NULL,

fk3_local_authority_id INTEGER NOT NULL,

fk4_road_id INTEGER NOT NULL,

fk5_road_category_id INTEGER NOT NULL,

fk6_vehicle_id INTEGER NOT NULL,

-- Specify the PRIMARY KEY constraint for table

"fact_transport".

-- This indicates which attribute(s) uniquely identify each

row of data.

CONSTRAINT pk_fact_transport

PRIMARY KEY (fact_transport_id)

);

--------------------------------------------------------------

-- Alter Tables to add fk constraints

--

-- Now all the tables have been created

the ALTER TABLE command is used to define some additional

-- constraints. These typically constrain values of foreign

keys to be associated in some way

-- with the primary keys of related

tables. Foreign key constraints can

actually be specified

-- when each table is created, but

doing so can lead to dependency problems within the script

-- i.e. tables may be referenced before

they have been created. This method is

therefore safer.

-- Alter table to add new constraints

required to implement the "fk_year" relationship

-- This constraint ensures that the

foreign key of table "fact_transport"

-- correctly references the primary key

of table "dim_year"

ALTER TABLE fact_transport ADD CONSTRAINT

fk1_fact_transport_to_dim_time FOREIGN KEY(fk1_time_id) REFERENCES dim_time(time_id) ON DELETE RESTRICT ON UPDATE RESTRICT;

-- Alter table to add new constraints required

to implement the "fk_region" relationship

-- This constraint ensures that the

foreign key of table "fact_transport"

-- correctly references the primary key

of table "dim_region"

ALTER TABLE fact_transport ADD CONSTRAINT

fk2_fact_transport_to_dim_region FOREIGN KEY(fk2_region_id) REFERENCES

dim_region(region_id)

ON DELETE RESTRICT ON UPDATE RESTRICT;

-- Alter table to add new constraints

required to implement the "fk_local_authority" relationship

-- This constraint ensures that the

foreign key of table "fact_transport"

-- correctly references the primary key

of table "dim_local_authority"

ALTER TABLE fact_transport ADD CONSTRAINT

fk3_fact_transport_to_dim_local_authority FOREIGN

KEY(fk3_local_authority_id) REFERENCES

dim_local_authority(local_authority_id) ON DELETE RESTRICT ON UPDATE RESTRICT;

-- Alter table to add new constraints

required to implement the "fk_road" relationship

-- This constraint ensures that the

foreign key of table "fact_transport"

-- correctly references the primary key

of table "dim_road"

ALTER TABLE fact_transport ADD CONSTRAINT

fk4_fact_transport_to_dim_road FOREIGN KEY(fk4_road_id) REFERENCES dim_road(road_id) ON DELETE RESTRICT ON UPDATE RESTRICT;

-- Alter table to add new constraints

required to implement the "fk_road_category" relationship

-- This constraint ensures that the

foreign key of table "fact_transport"

-- correctly references the primary key

of table "dim_road_category"

ALTER TABLE fact_transport ADD CONSTRAINT

fk5_fact_transport_to_dim_road_category FOREIGN

KEY(fk5_road_category_id) REFERENCES

dim_road_category(road_category_id) ON DELETE RESTRICT ON UPDATE RESTRICT;

-- Alter table to add new constraints

required to implement the "fk_vehicles" relationship

-- This constraint ensures that the

foreign key of table "fact_transport"

-- correctly references the primary key

of table "dim_vehicles"

ALTER TABLE fact_transport ADD CONSTRAINT

fk6_fact_transport_to_dim_vehicles FOREIGN KEY(fk6_vehicle_id) REFERENCES dim_vehicles(vehicle_id) ON DELETE RESTRICT ON UPDATE RESTRICT;

--------------------------------------------------------------

-- End of DDL file auto-generation

--------------------------------------------------------------

1.10. Decisions on Data Maintenance and Slowly Changing Dimensions

Data Maintenance

Consider

How often data will be uploaded (updated) in the data warehouse.

Maintaining

the data in data warehouse means keeping the data very well organized, updating

the warehouse to fit constantly changing business requirements and reviewing

all updates. Data can be maintained on the data warehouse using following steps

i.

Regularly updating the data warehouse by

removing unnecessary data and duplicate entries, cleaning up redundant data and

inconsistent relationships.

ii.

Keeping the web log lean and deleting

the summarized data regularly, as over-congested logs will decrease performance

speed.

iii.

Evaluating whether to expand the

warehouse every time a new profile properties is added, as every database

object can only contain a certain number of properties.

iv.

Transforming data by combining multiple

fields into one field; breaking down data fields into separate fields based on

year, month and day; mapping related data sets into a single representation;

and applying surrogate keys to dimension table records.

v.

Keeping users of the warehouse

well-trained on how to create queries, access database structures and handle

unexpected contingencies.

Slowly Changing Dimensions (SCD)

According to Kimball, Slowly Changing Dimension is the

name of data management process that loads both historical and current data

over time into dimension table in data warehouse. In order to adopt SCD, the

data needs to change slowly on an irregular, random and variable schedule.

There are six different types of SCD methodologies,

namely Type 0, Type 1, Type 2, Type 3, Type 4 and Type 6. Among them, first

three are commonly practiced. All of this types of Slowly Changing Dimensions

are discussed below:

i.

Type 0: In this type of SCD, changes in data are not updated,

so the original values of the record remain in the dimensions that was

initially created.

ii.

Type 1: This type of SCD is easy to maintain and it overwrites

old records with new records. No historical observation are kept in this data

warehouse.

iii.

Type 2: In this type of SCD, the records are updated by inserting

new observation while preserving the observations. In this type of SCD,

unlimited historical observations can be preserved with this type.

iv.

Type 3: This SCD type updates the record by creating new

dimensions to the table structure and preserves the limited history (only the

previous record could be preserved).

v.

Type 4: Type 4 SCD updates the record in the current data table

and preserves all or some historical observations in an archive table.

vi.

Type 6: Type 6 methodology is a hybrid methodology of Type 1,

Type 2 and Type 3 (1+2+3=6). It integrates Type 3 SCD by creating additional

dimensions to the table structure while preserving historical observation.

Similarly it incorporates Type 2 and Type 1 by creating new dimensions to

include the different version number and by updating the latest records in the

observation respectively (Lihui, Choy and

L.F. Cheong, 2013).

1.11. Data Warehouse Interface Suggestions

In order to design data warehouse application for the

transport department of the Leeds City Council, Star Schema and OLAP design

approach and methodology has been chosen. Using Star Schema, different

dimension tables and fact tables were designed as required. In order to access

and maintain data warehouse by the non-technical person, a simple, responsive

User Interface will be designed using Oracle Apex.

2. Forward Engineering of Star Schema Using QSEE

2.1. Star Schema Set Up

Star Schema of data warehouse for transport department

of Leeds City Council is as follows:

|

| Star Schema for Leeds City Transport |

The

scripts to create the above fact and dimension tables for transport department

of Leeds City Council are as follows

i.

Dim_time

DROP table

dim_time;

create table

dim_time(

time_id NUMBER(10) NOT NULL,

year date

NOT NULL

);

ALTER table

dim_time add constraint

pk_timeid primary key (time_id);

create sequence

seq_timeid

start with

1

increment by

1

nocache

nocycle;

ii.

Dim_region

DROP table

dim_region;

create table

dim_region(

region_id NUMBER(10) NOT NULL,

region_name VARCHAR2(100) NOT NULL

);

ALTER TABLE

dim_region add constraint

pk_regionid primary key

(region_id);

create sequence

seq_regionid

start with

1

increment by

1

nocache

nocycle;

iii.

Dim_local_authority

DROP TABLE

dim_local_authority;

CREATE TABLE

dim_local_authority(

local_authority_id NUMBER(10) NOT NULL,

local_authority_name VARCHAR2(100) NOT NULL

);

ALTER TABLE

dim_local_authority ADD CONSTRAINT pk_localauthorityid PRIMARY

KEY (local_authority_id);

create sequence

seq_localauthorityid

start with

1

increment by

1

nocache

nocycle;

iv.

Dim_vehicles

DROP TABLE

dim_vehicles;

CREATE TABLE

dim_vehicles(

vehicle_id NUMBER(10) NOT NULL,

pedal_cycle NUMBER(10),

motorcycle NUMBER(10),

car_taxis NUMBER(10),

buses_coaches NUMBER(10)

);

ALTER TABLE

dim_vehicles ADD CONSTRAINT

pk_vehicleid PRIMARY KEY

(vehicle_id);

create sequence

seq_vehicleid

start with

1

increment by

1

nocache

nocycle;

v.

Dim_road

DROP TABLE

dim_road;

CREATE TABLE

dim_road(

road_id NUMBER(10) NOT NULL,

road_name VARCHAR2(150) NOT NULL,

start_junction VARCHAR2(150),

end_junction VARCHAR2(150),

link_length DECIMAL

);

ALTER TABLE

dim_road ADD CONSTRAINT

pk_roadid PRIMARY KEY (road_id);

create sequence

seq_roadid

start with

1

increment by

1

nocache

nocycle;

vi.

Dim_road_category

DROP TABLE

road_category;

CREATE TABLE

road_category(

road_category_id NUMBER(10) NOT NULL,

road_category VARCHAR2(100) NOT NULL

);

ALTER TABLE

road_category ADD CONSTRAINT

pk_roadcategoryid PRIMARY KEY (road_category_id);

create sequence

seq_roadcategoryid

start with

1

increment by

1

nocache

nocycle;

vii.

Fact_transport

DROP TABLE

fact_transport;

CREATE TABLE

fact_transport(

id NUMBER(10)

NOT NULL,

time_id NUMBER(10) NOT NULL,

region_id NUMBER(10) NOT NULL,

local_authority_id NUMBER(10) NOT NULL,

road_id NUMBER(10) NOT NULL,

road_category_id NUMBER(10) NOT NULL,

vehicle_id NUMBER(10) NOT NULL

);

ALTER TABLE

fact_transport ADD CONSTRAINT

pk_id PRIMARY KEY (id);

ALTER TABLE

fact_transport ADD CONSTRAINT

fk_time_id FOREIGN KEY

(time_id)

REFERENCES dim_time (time_id);

ALTER TABLE

fact_transport ADD CONSTRAINT

fk_region_id FOREIGN KEY

(region_id)

REFERENCES dim_region (region_id);

ALTER TABLE

fact_transport ADD CONSTRAINT

fk_localauthority_id FOREIGN KEY (local_authority_id) REFERENCES

dim_local_authority (local_authority_id);

ALTER TABLE

fact_transport ADD CONSTRAINT

fk_road_id FOREIGN KEY

(road_id)

REFERENCES dim_road (road_id);

ALTER TABLE

fact_transport ADD CONSTRAINT

fk_road_category_id FOREIGN KEY (road_category_id) REFERENCES

dim_road_category (road_category_id);

ALTER TABLE

fact_transport ADD CONSTRAINT

fk_vehicle_id FOREIGN KEY

(vehicle_id)

REFERENCES dim_vehicles

(vehicle_id);

create sequence

seq_id

start with

1

increment by

1

nocache

nocycle;

After running the queries above, table created are

shown in the following image

|

| Dim and Fact Tables Created after Running Queries |

2.2. Data Gathering

A.

Leeds City Council’s

transport data in csv format

|

| Leeds City Transport Data in CSV format |

A.

Uploading the csv

data into staging area using Apex tools

In order to

collect data needed for data warehouse of transport department of Leeds City

Council, the data in spreadsheet should be uploaded to staging area using Apex

tools. Steps to upload the data is as follows:

1. Go to Oracle Home Page

|

| Oracle Home Page |

2. Then Click on SQL Workshop, following dashboard will open

|

| Go to SQL Workshop |

3. Now go to utilities tab

|

| Go to Utilities Tab |

4. And then Click on Data Workshop

|

| Go to Data Workshop |

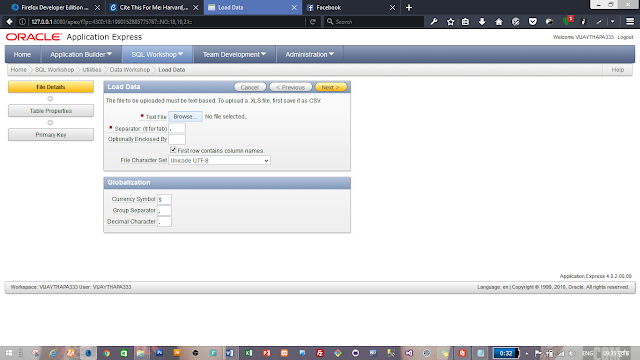

5. Now Select the spreadsheet data. Load data tab will open.

6. Now check on new table. Also check on Upload file to upload the spreadsheet data then click on next.

|

| Check on New Table |

7. Now click on browse button and select the spreadsheet file to upload and click next.

|

| Click on Browse Button and Select Spreadsheet File |

8. Now give the table name and click on next button.

|

| Give the Table Name and Click on Next |

9. Finally click on Load data button. And the data will be uploaded to the staging area.

|

| Click on Load Data Button |

10. Final output after the data is successfully uploaded to staging area from csv file.

|

| Final Output after data is loaded |

References

Ballard, C., Herreman, D., Schau, D., Bell, R., Kim, E. and Valencic, A. (1998). IBML Data Modeling Techniques for Data Warehousing. 1st ed. [ebook] California: International Bussiness Machines Corporation. Available at: http://www.redbooks.ibm.com/redbooks/pdfs/sg242238.pdf [Accessed 16 Sep. 2016].Bellachia, A. (2016). Data Warehousing and OLAP Technology. 1st ed. [ebook] Available at: https://www.seas.gwu.edu/~bell/csci243/lectures/data_warehousing.pdf [Accessed 14 Sep. 2016].

DataOnFocus. (2016). OLTP vs OLAP: Definitions and Comparison - DataOnFocus. [online] Available at: http://www.dataonfocus.com/oltp-vs-olap/ [Accessed 16 Sep. 2016].

Datawarehouse4u.info. (2016). Data Warehouse Schema Architecture - fact constellation schema. [online] Available at: http://datawarehouse4u.info/Data-warehouse-schema-architecture-fact-constellation-schema.html [Accessed 15 Sep. 2016].

Datawarehouse4u.info. (2016). OLTP vs. OLAP. [online] Available at: http://datawarehouse4u.info/OLTP-vs-OLAP.html [Accessed 16 Sep. 2016].

Docs.oracle.com. (2016). Introduction to Data Warehousing Concepts. [online] Available at: https://docs.oracle.com/database/121/DWHSG/concept.htm#DWHSG001 [Accessed 14 Sep. 2016].

Lapluea, T. (2016). “Why Enterprise Data Warehouse?”: Data Warehousing Development at Offco Ltd.. [online] Academia.edu. Available at: http://www.academia.edu/208553/_Why_Enterprise_Data_Warehouse_Data_Warehousing_Development_at_Offco_Ltd [Accessed 14 Sep. 2016].

Lihui, W., Choy, M. and L.F. Cheong, M. (2013). Implementation of Slowly Changing Dimension to Data Warehouse to Manage Marketing Campaigns in Banks. 1st ed. [ebook] Singapore. Available at: http://support.sas.com/resources/papers/proceedings13/239-2013.pdf [Accessed 16 Sep. 2016].

MacLauchlan, S. (2013). Data Warehousing Methodologies - Data Blueprint. [online] Data Blueprint. Available at: http://www.datablueprint.com/data-warehousing-methodologies/ [Accessed 14 Sep. 2016].

MacLauchlan, S. (2013). Data Warehousing Methodologies - Data Blueprint. [online] Data Blueprint. Available at: http://www.datablueprint.com/data-warehousing-methodologies/ [Accessed 15 Sep. 2016].

Note: I don't encourage any student to copy any assignments. And in case of plagiarism, student will be responsible.

SUBSCRIBE for more assignments like this.

![Advertisement [ad]](https://blogger.googleusercontent.com/img/a/AVvXsEgVAiCox6-vLXsNZas8ks-nfos0PgdnL4yClmlqOkl92t7zGdYYiLBy9AHMZFxBYe06DVmN6JGQ9S0P3iClXk8l43FIQPDyAcx_uMmV0bN9JlKjTzOAi7YjmQo6cuvHgkEO76L-hcqV-TWE29v93eeFby8MOAOuJ8DcilHTPpfP8aKg8TG9uYCDaMxcr8H1=s600)

Comments