Table of Contents

TASK 1: Implementation

a. ETL (Extract, Transform, Load) Script

The ETL Process

b. DW reports

Report Showing Total Number of Motorcycles in Different Year

Report Showing The Total Number of Vehicles in Different Road Category

TASK 1: Implementation

a. ETL (Extract, Transform, Load) Script:

|

| Star Schema of Leeds Transport Department |

The ETL Process

ETL stands for Extract, Transform and Load. In data warehousing, it is the process responsible for pulling data out of the source systems and placing it into a data warehouse. Put simply, it is the process of copying data from one database to another (Priya and Sulatana A., 2016). Here, data is extracted from an OLTP database, transformed to match the data warehouse schema and loaded into the data warehouse database. These steps are discussed below.

1. Extract

In this step, data from different sources such as OLTP databases and other RDBMSs is extracted and imported into the staging area, and then into the temporary dimension and fact tables. The data in these tables is then ready for filtering and data quality checks in the next step, Transform. To extract the data of the transport department of Leeds City Council, the data from the spreadsheet is loaded into the staging area as follows:

|

| Extraction of Data from Spreadsheet to the Staging Area |

After the data is extracted, it is inserted into the temporary dimension and fact tables for further processing. The steps to create these tables and insert data into them are discussed below.

i. tmp_dim_time

The query to create tmp_dim_time is as follows:

DROP table tmp_dim_time;

create table tmp_dim_time(

time_id NUMBER(10) NOT NULL,

year VARCHAR2(100) NOT NULL

);

ALTER table tmp_dim_time add constraint pk_timeid primary key (time_id);

DROP SEQUENCE seq_time_id;

create sequence seq_time_id

start with 1

increment by 1

nocache

nocycle;

|

| Proof of Trigger Created for Dim Time Table |

The query and evidence of the trigger created for tmp_dim_time are as follows:

|

| Proof of Dim Time Table Creation and Data Insertion |
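As a rough sketch of how a temporary dimension such as tmp_dim_time might be filled from the staging area, the example below uses Python's built-in sqlite3 module as a stand-in for the Oracle schema. The staging table name `staging_traffic`, its columns and the sample rows are assumptions made for illustration, and SQLite's auto-assigned integer key plays the role of the Oracle sequence and trigger.

```python
import sqlite3

# In-memory SQLite stands in for the Oracle schema; the staging table
# name and its columns are assumptions made for this illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE staging_traffic (year TEXT, road_name TEXT, motorcycles INTEGER);
    -- INTEGER PRIMARY KEY auto-assigns ids, playing the role of the
    -- Oracle sequence seq_time_id together with its insert trigger.
    CREATE TABLE tmp_dim_time (
        time_id INTEGER PRIMARY KEY,
        year    TEXT NOT NULL
    );
""")

# Hypothetical staging rows (not taken from the Leeds spreadsheet).
conn.executemany(
    "INSERT INTO staging_traffic VALUES (?, ?, ?)",
    [("2001", "A58", 120), ("2001", "A61", 95), ("2002", "A58", 130)],
)

# One dimension row per distinct year found in the staging area.
conn.execute("""
    INSERT INTO tmp_dim_time (year)
    SELECT DISTINCT year FROM staging_traffic ORDER BY year
""")

time_rows = conn.execute(
    "SELECT time_id, year FROM tmp_dim_time ORDER BY time_id"
).fetchall()
print(time_rows)
```

The same pattern (select the distinct values of one staging column and let the key be generated) would apply to the other temporary dimension tables.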

ii. tmp_dim_region

A temporary dim_region table is created to hold the data loaded from the staging area so that it can be checked for possible errors.

DROP table dim_region;

create table dim_region(

region_id NUMBER(10) NOT NULL,

region_name VARCHAR2(100) NOT NULL

);

ALTER TABLE dim_region add constraint pk_regionid primary key (region_id);

create sequence seq_regionid

start with 1

increment by 1

nocache

nocycle;

| Proof of Dim Region Table Created |

|

| Proof of Trigger Created for tmp dim region |

|

| Proof of Data Insertion in dim region table |

iii. tmp_dim_local_authority

Query to create tmp_dim_local_authority is as follows

DROP TABLE tmp_dim_local_authority;

CREATE TABLE tmp_dim_local_authority(

local_authority_id NUMBER(10) NOT NULL,

local_authority_name VARCHAR2(100) NOT NULL

);

ALTER TABLE tmp_dim_local_authority ADD CONSTRAINT pk_localauthorityid PRIMARY KEY (local_authority_id);

create sequence seq_localauthority_id

start with 1

increment by 1

nocache

nocycle;

|

| Proof of Trigger Created for Dim Local Authority |

The evidence and query for data insertion into the local authority table are as follows:

|

| Proof of Data Insertion in Dim Local Authority |

iv. tmp_dim_vehicles

Query to create tmp_dim_vehicles is as follows

DROP TABLE tmp_dim_vehicles;

CREATE TABLE tmp_dim_vehicles(

vehicle_id NUMBER(10) NOT NULL,

pedal_cycle NUMBER(10),

motorcycle NUMBER(10),

car_taxis NUMBER(10),

buses_coaches NUMBER(10)

);

ALTER TABLE tmp_dim_vehicles ADD CONSTRAINT pk_vehicleid PRIMARY KEY (vehicle_id);

DROP SEQUENCE seq_vehicle_id;

create sequence seq_vehicle_id

start with 1

increment by 1

nocache

nocycle;

|

| Proof of Table Created for Dim Tmp Vehicle Table |

The evidence and query to insert data into the tmp_dim_vehicles table are as follows:

|

| Proof of Data Insertion in dim tmp vehicle |

v. tmp_dim_road_category

Query to Create tmp_dim_road_category is as follows

DROP TABLE tmp_road_category;

CREATE TABLE tmp_road_category(

road_category_id NUMBER(10) NOT NULL,

road_category VARCHAR2(100) NOT NULL

);

ALTER TABLE tmp_road_category ADD CONSTRAINT pk_roadcategoryid PRIMARY KEY (road_category_id);

create sequence seq_roadcategory_id

start with 1

increment by 1

nocache

nocycle;

|

| Proof of Data Insertion in tmp_road_category |

vi. tmp_dim_road

Query to create tmp_dim_road is as follows

DROP TABLE tmp_dim_road;

CREATE TABLE tmp_dim_road(

road_id NUMBER(10) NOT NULL,

road_name VARCHAR2(150) NOT NULL,

start_junction VARCHAR2(150),

end_junction VARCHAR2(150),

link_length DECIMAL

);

ALTER TABLE tmp_dim_road ADD CONSTRAINT pk_roadid PRIMARY KEY (road_id);

DROP SEQUENCE seq_road_id;

create sequence seq_road_id

start with 1

increment by 1

nocache

nocycle;

|

| Proof of Trigger Created for dim road |

|

| Proof of Data Insertion in Dim Road Table |

2. Transform

After the data is loaded into the temporary dimension and fact tables, it is cleaned and filtered in this step so that it can be loaded into the final tables in the data warehouse. First, the temporary dimension tables were checked for errors, and any errors found were inserted into the error table. To record the possible errors, the error table is created as follows:

|

| Error Table Created |

After the error table is created, the temporary tables are checked for possible errors, and the errors found are inserted into the error table as follows.

Error in Dim_Local_Authority

A local authority with a "NULL" local authority name was inserted.

|

| Error in tmp_dim_local_authority table found |

Inserting Error in the Error Table

|

| Inserting the error found into the error table |

Removing the Error Value from tmp_dim_local_authority

|

| Error removed from the tmp_dim_local_authority table to clean the data |

Error in Road Table

|

| Error in road table |

Error from Road Table inserted to the Error Table

|

| Errors inserted into the error table |

Displaying Error Inserted into the Error Table

|

| Displaying Error Inserted into Error Table |
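The check, log and remove sequence described above can be sketched as follows. This is only an illustration in Python with an in-memory SQLite database: the layout of `error_table` (source table, row id, reason) is hypothetical, since the original error table appears only as a screenshot, and the sample rows are invented. Both a true NULL and the literal text 'NULL' are treated as a missing name.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE tmp_dim_local_authority (
        local_authority_id   INTEGER PRIMARY KEY,
        local_authority_name TEXT
    );
    -- Hypothetical error-table layout, assumed for this sketch.
    CREATE TABLE error_table (
        source_table TEXT,
        source_id    INTEGER,
        reason       TEXT
    );
    INSERT INTO tmp_dim_local_authority
    VALUES (1, 'Leeds'), (2, NULL), (3, 'NULL');
""")

# Rows whose name is missing or is the literal text 'NULL'.
bad_rows = (
    "local_authority_name IS NULL "
    "OR UPPER(TRIM(local_authority_name)) = 'NULL'"
)

with conn:  # one transaction: log the bad rows, then remove them
    conn.execute(f"""
        INSERT INTO error_table (source_table, source_id, reason)
        SELECT 'tmp_dim_local_authority', local_authority_id, 'missing name'
        FROM tmp_dim_local_authority
        WHERE {bad_rows}
    """)
    conn.execute(f"DELETE FROM tmp_dim_local_authority WHERE {bad_rows}")

clean = conn.execute(
    "SELECT local_authority_id, local_authority_name "
    "FROM tmp_dim_local_authority"
).fetchall()
errors = conn.execute(
    "SELECT source_id FROM error_table ORDER BY source_id"
).fetchall()
print(clean, errors)
```

Logging before deleting, inside one transaction, means a failure part-way through cannot lose the bad rows without recording them.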

3. Load

After the errors are tracked and inserted into the error table, they are removed from the temporary tables, so the temporary tables contain only clean data. This data is transferred into the final dimension and fact tables. For this, new dimension and fact tables are created and the clean data is inserted as follows:

i. dim_time

The dim_time table is created to load the clean, organized data from the temporary tmp_dim_time table. The code to create the dim_time table is as follows:

|

| Proof of creating dim_time table |

After the final dim_time table is created, clean data from tmp_dim_time table is loaded as follows:

|

| Proof of Trigger created for Dim Time Table |

|

| Data inserted into the dim_time table |

ii. dim_region

To load the data from tmp_dim_region, the final dim_region table has been created as follows:

|

| Proof of creating dim_region table |

After creating the dim_region table, clean data from the tmp_dim_region table has been loaded to this table as follows:

|

| Proof of Dim Region Trigger Created |

|

| Data inserted into the dim_region table |

iii. dim_local_authority

To hold the clean data after the errors have been removed from tmp_dim_local_authority, a final dim_local_authority table has been created as follows:

|

| Proof of creating dim_local_authority table |

|

| Proof of Trigger Created for dim_local_authority table |

|

| Clean Data Inserted into dim_local_authority from tmp_dim_local_authority |

iv. dim_vehicles

The dim_vehicles table is created to load the clean data from tmp_dim_vehicles.

The clean data is then loaded into this table as follows:

|

| Proof of creating dim_vehicles table |

|

| Proof of Trigger Created for vehicles |

|

| Proof of Clean Data Insertion into Vehicles |

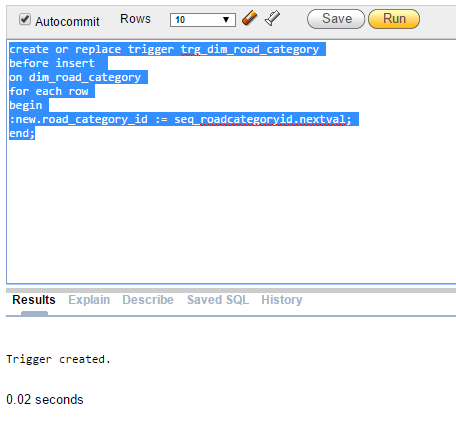

v. dim_road_category

The dim_road_category table has been created to hold the clean data from the temporary table after the errors have been removed.

The data is then inserted into this table from the temporary table as follows:

|

| Proof of dim_road_category created Successfully |

|

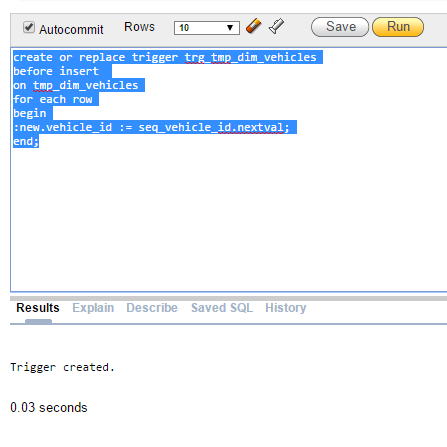

| Proof of Trigger Created for Road Category |

|

| Data Successfully inserted into dim_road_category |

vi. dim_road

The dim_road table has been created to load the clean data from tmp_dim_road.

The data is then loaded into this table from the temporary table as follows:

|

| Proof of Dim Road Created Successfully |

|

| Proof of Trigger Created for Dim Road Successfully |

|

| Proof of Data Inserted Successfully into Dim_Road |

vii. fact_transport

The code to create the fact_transport table is as follows:

|

| Proof of Creating Fact Transport Successfully |

|

| Proof of Data Insertion Into Fact Transport Table |

b. DW reports:

Create 2 Data Warehouse reports (using SQL or Apex reporting tools). Include code, screen shots and evidence of the code running successfully with results and a brief discussion of the findings.

Oracle APEX is used here to present the data in informative, easy-to-understand forms such as bar charts, pie charts and line charts. Using SQL queries and APEX, such charts can be generated dynamically. The reports created for the transport department of Leeds City Council using the APEX reporting tools are described below.

Report showing total number of motorcycles in different Year

The report showing the total number of motorcycles in different years has been generated using an SQL query as well as the APEX reporting tool. The following SQL query shows the total number of motorcycles in each year.

|

| SQL Showing Number Of Motorcycles in different Year |

|

| Report Showing the number of motorcycles in different year |

The SQL and APEX reports in the figures above show the total number of motorcycles in each year. The number of motorcycles was highest in 2005 and lowest in 2001. To create this bar graph, inner joins are used between the fact table and the dimension tables.

Report showing the total number of vehicles in different road category

The data from the transport department of Leeds City Council has been used to generate reports that may help to discover hidden patterns in the historical data. The reports were created using SQL queries and APEX tools in Oracle. The query in the figure below displays the total number of vehicles in different road categories.

|

| SQL Showing Number of Vehicles in Different Roads |

|

| Total Number of Vehicles in Different Roads |

The diagrams in the figures above show the total number of cars and taxis in different road categories. The road category PM has the highest number of cars and taxis (42529) and the road category PR has the lowest (2378). The pie chart is easy to read and can be understood without difficulty. Here, red indicates the TR road category, blue indicates PM, green indicates TM, yellow represents PU and light green represents PR.

Hence, the data from the transport department of Leeds City Council is transformed and loaded into the data warehouse, and finally displayed as graphs and charts using BI tools such as SQL queries and APEX.
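The inner join behind a report such as the motorcycles-per-year report could look roughly like the sketch below. It runs against an in-memory SQLite database from Python rather than Oracle. The column layout of fact_transport (one foreign key per dimension) is an assumption, because the original table definition is only shown as a screenshot, and the figures are invented sample values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_time (time_id INTEGER PRIMARY KEY, year TEXT NOT NULL);
    CREATE TABLE dim_vehicles (vehicle_id INTEGER PRIMARY KEY, motorcycle INTEGER);
    -- Assumed fact layout: one foreign key per dimension.
    CREATE TABLE fact_transport (
        time_id    INTEGER REFERENCES dim_time(time_id),
        vehicle_id INTEGER REFERENCES dim_vehicles(vehicle_id)
    );
    -- Invented sample values, not the Leeds figures.
    INSERT INTO dim_time VALUES (1, '2001'), (2, '2005');
    INSERT INTO dim_vehicles VALUES (1, 10), (2, 15), (3, 40);
    INSERT INTO fact_transport VALUES (1, 1), (1, 2), (2, 3);
""")

# Join the fact table to both dimensions and total motorcycles per year.
report = conn.execute("""
    SELECT t.year, SUM(v.motorcycle) AS total_motorcycles
    FROM fact_transport f
    INNER JOIN dim_time t     ON t.time_id = f.time_id
    INNER JOIN dim_vehicles v ON v.vehicle_id = f.vehicle_id
    GROUP BY t.year
    ORDER BY t.year
""").fetchall()
print(report)
```

Swapping dim_time for the road category dimension and grouping by the category column would give the shape of the second report.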

TASK 2: Data Analysis/OLAP Mining Investigation

a) Upload part or all of the leeds_traffic spreadsheet into MS Excel. Create a pivot table and produce some interesting (and appropriate) reports using the charts and visualizations functionality. You may include (or discuss) external data as well.

Pivot Table

In data processing, a pivot table is a data summarization tool found in data visualization programs such as spreadsheets and business intelligence software, which gives a dynamic, all-round view of the data. Put simply, a pivot table can aggregate information and show a new perspective in a few clicks, instead of requiring analysis of countless spreadsheet records. A pivot table can be described as a simplified version of the more complete and complex Online Analytical Processing (OLAP) concepts. In a pivot table, components such as rows, columns, data fields and pages can be moved around, which helps to expand, isolate, sum and group particular data in real time.
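To make the idea of moving rows and columns around concrete, here is a minimal sketch of the aggregation a pivot table performs, written in plain Python. The record layout (year, vehicle type, count) and the numbers are hypothetical and are not taken from the leeds_traffic spreadsheet.

```python
from collections import defaultdict

# Hypothetical records in the shape (year, vehicle_type, count).
records = [
    (2003, "Motorcycles", 120),
    (2003, "Cars and Taxis", 900),
    (2004, "Motorcycles", 150),
    (2004, "Cars and Taxis", 950),
    (2004, "Motorcycles", 30),
]

def pivot_sum(rows):
    """Sum values into a nested dict: table[row_key][column_key]."""
    table = defaultdict(lambda: defaultdict(int))
    for row_key, col_key, value in rows:
        table[row_key][col_key] += value
    return {r: dict(cols) for r, cols in table.items()}

# Years as rows, vehicle types as columns.
by_year = pivot_sum(records)
# Swapping the first two fields pivots the same data the other way.
by_type = pivot_sum((vtype, year, n) for year, vtype, n in records)

print(by_year[2004]["Motorcycles"])
print(by_type["Cars and Taxis"])
```

The same records produce both views; only the choice of row and column keys changes, which is what dragging fields in a spreadsheet pivot table does.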

Why Pivot Table?

Creating neat, informative summaries out of huge lists of raw data is a common challenge. With a pivot table, large amounts of data can be managed and analyzed in one go. It can be used to find patterns in data that support forecasting, to create reports quickly, and to support quick decision making, as it allows users to analyze the data easily. The benefits of using a pivot table can be summarized as follows:

i. Sums up large amount of information in a small amount of space

ii. Helps decision-makers use data more efficiently

iii. Keeps data more organized

iv. Provides interactive data analysis

v. Can link to external data sources

vi. Can chart summarized data in the Pivot Table

Pivot Table for Leeds Traffic

To visualize, organize and analyze the Leeds traffic data in a simpler way, the following pivot tables are designed. Each table describes a particular situation and is discussed below.

A. Vehicles in Different Year

|

| Vehicles in Different year |

The bar diagram in the figure above shows the total number of different vehicles (buses and coaches, cars and taxis, motorcycles and pedal cycles) over the years, in a form that is easy to understand and describe. It shows that the total number of cars and taxis is far higher than that of any other vehicle type in every year. From this, it can be expected that cars and taxis will continue to outnumber other vehicle types in the coming years.

B. Vehicles in 2004

|

| Vehicles in Different Road in 2004 |

The bar graph in the figure above shows the total number of different vehicle types on different roads in the same year (2004). The number of cars and taxis is higher than that of the other vehicle types on both roads, but the number of cars and taxis on road A6038 is far higher than on road A6029. The numbers of other vehicle types, such as motorcycles, pedal cycles and buses and coaches, are almost the same on both roads.

C. Sum of Motorcycles in Different Road Category

|

| Sum of Motorcycles in Different Road Category |

The pie chart in the figure above shows the number of motorcycles in different road categories. The number of motorcycles is highest in road category PU, followed by TM, PR, TR and PM. Here, grey represents the PU road category, yellow represents TM, blue represents TR, light blue represents PM and red represents PR.

b) Discuss one data mining technique with respect to the case study.

Data Mining

Introduction to Data Mining

Data mining is the process of finding anomalies, patterns and correlations within large data sets in order to predict future outcomes, using sophisticated mathematical algorithms. The information obtained can be used to increase revenues, cut costs, improve customer relationships, reduce risks and more, using a broad range of techniques. Put simply, it is the process of digging through data to discover hidden connections and predict future trends. Its foundation comprises three intertwined scientific disciplines: statistics (the numeric study of data relationships), artificial intelligence (human-like intelligence displayed by software and/or machines) and machine learning (algorithms that can learn from data to make predictions). Data mining is also known as Knowledge Discovery in Data (KDD).

Some of the key properties of the Data Mining are as follows

i. Automatic discovery of patterns

ii. Prediction of likely outcomes

iii. Creation of actionable information

iv. Focus on large data sets and databases

Retailers, banks, manufacturers, telecommunications providers and insurers, among others, are using data mining to discover relationships among everything from pricing, promotions and demographics to how the economy, risk, competition and social media are affecting their business models, revenues, operations and customer relationships.

Why Data Mining?

In the modern world of technology, digital data is increasing rapidly: the volume of data produced is doubling every two years, and more than 90 percent of the data collected in this digital universe is unstructured. More data does not necessarily mean more knowledge. Thus, the need for data mining arose to make this data more valuable and informative. Besides this, interest in data mining is increasing because it allows us to:

i. Sift through all the chaotic and repetitive noise in data.

ii. Understand what is relevant and then make good use of that information to assess likely outcomes.

iii. Accelerate the pace of making informed decisions.

Advantages of Data Mining

Some of the major advantages of Data Mining on different fields are discussed below

i. Health Sectors

Data mining is now widely used in health and safety for analyzing, investigating and predicting diseases in future generations. In human genetics, data mining can be used to find out how changes in an individual's DNA sequence affect the risk of developing common diseases such as cancer (Hassas, 2016).

ii. Law Enforcement

Data mining can help government bodies to identify and detain criminal suspects by examining trends in location, crime type, habits and other patterns of behavior. Besides this, it also helps government agencies to analyze records of financial transactions and build patterns that can detect money laundering or other criminal activities (ZenTut, 2016).

iii. Marketing/Retailers

Data mining can assist direct marketers by providing them with useful and accurate trends about their customers’ purchasing behavior. And through the results of data mining, marketers will have an appropriate approach to selling profitable products to targeted customers.

iv. Finance/Banking

Data mining provides loan and credit reporting information based on historical customer data, which can help to distinguish good loans from bad ones. In addition, data mining helps banks to detect fraudulent credit card transactions and so protect credit card owners.

v. Electrical power Engineering

Data mining techniques have been used for condition monitoring of high voltage electrical equipment to obtain valuable information on the health of the equipment's insulation (Hassas, 2016).

Disadvantages of Data Mining

Some of the major disadvantages of Data Mining are discussed below:

i. Privacy Issues

In the modern digital world, information about everyone who uses the internet is collected, directly or indirectly, by various means. Such information is used to predict our interest in products or services. When a company is sold to another, the personal information of its customers is sold too, which may result in that information being leaked.

ii. Security Issues

As discussed earlier, most of our information is collected in digital form, and the information held by an organization may include names, contact details, birthdays, payroll data, social security numbers, etc. If hackers gain access to such data, they can cause serious problems such as identity theft and stolen credit card details. Such incidents happened some years ago at big corporations like Ford Motor Credit Company and Sony, where hackers gained access and stole large amounts of data.

iii. Misuse of Information

Information obtained through data mining can be used by people or organizations with unethical intentions to take advantage of vulnerable people or to discriminate against a group of people.

Besides the points discussed above, data mining is not 100% accurate, and decisions based on inaccurate results can cause serious damage.

Thus, data mining has benefits in sectors such as marketing, finance, health, engineering, manufacturing and government, as well as for individuals. However, it can also cause serious problems such as privacy leaks, identity theft and misuse of information if these issues are not addressed properly.

Data Mining Techniques

a. Association

Association is one of the best-known data mining techniques, as it is the most familiar and straightforward. Here, the relationships between co-occurring items are analyzed to find patterns, often in sales transactions. For example, it might be noted that customers who buy bread at a department store often buy milk at the same time. Using association, it might be found that 87% of the checkout sessions that include bread also include milk. Once such a pattern is identified, it can be used to suggest milk to people who are buying bread. This is also known as the relation technique, and it is used in market basket analysis to identify sets of products that are often bought together.

Types of association rule are as follows

i. Multilevel association rule

ii. Multidimensional association rule

iii. Quantitative association rule
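The "bread implies milk" figure in the example above is what association rule mining calls the confidence of a rule. A minimal sketch of how support and confidence could be computed is shown below; the baskets are hypothetical, so the result differs from the 87% quoted in the text.

```python
# Hypothetical checkout baskets, one set of items per checkout session.
baskets = [
    {"bread", "milk"},
    {"bread", "milk", "eggs"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "milk", "butter"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Share of transactions with the antecedent that also hold the consequent."""
    both = support(antecedent | consequent, transactions)
    return both / support(antecedent, transactions)

bread_milk_support = support({"bread", "milk"}, baskets)
bread_to_milk_conf = confidence({"bread"}, {"milk"}, baskets)
print(bread_milk_support, bread_to_milk_conf)
```

Here three of the five baskets contain both items, and three of the four baskets with bread also contain milk, so the confidence of "bread implies milk" is about 0.75.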

b. Classification

Classification is another classic data mining technique based on machine learning which is used to classify each item in a set of data into one of a predefined set of classes or groups (ZenTut, 2016). This approach frequently employs decision tree or neural network-based classification algorithms. Using this technique, software that can learn how to classify the data items into groups can be developed. An example of the implementation of this technique is an e-mail program that might attempt to classify an email as “legitimate” or a “spam” email.

The different types of classification models are as follows:

i. Classification by decision tree induction

ii. Bayesian Classification

iii. Neural Networks

iv. Support Vector Machines (SVM)

v. Classification Based on Associations
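As a small illustration of classification into predefined classes, the sketch below learns a one-level decision tree (often called a decision stump) for the spam example. The single feature, a count of suspicious words per e-mail, and the training data are hypothetical; a real classifier would use many features and a full tree or another model.

```python
def fit_stump(samples):
    """Pick the threshold on one numeric feature with the fewest errors.

    samples: list of (feature_value, label), label "spam" or "legitimate".
    Learned rule: value > threshold means "spam", otherwise "legitimate".
    """
    values = sorted({v for v, _ in samples})
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        return sum((v > threshold) != (label == "spam") for v, label in samples)

    return min(candidates, key=errors)

def classify(value, threshold):
    """Apply the learned rule to a new feature value."""
    return "spam" if value > threshold else "legitimate"

# Hypothetical training data: number of suspicious words per e-mail.
training = [
    (0, "legitimate"), (1, "legitimate"), (2, "legitimate"),
    (5, "spam"), (7, "spam"), (9, "spam"),
]
threshold = fit_stump(training)
print(threshold, classify(6, threshold), classify(1, threshold))
```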

c. Clustering

In this technique, individual pieces of data are grouped together to form a structured view. Classes or groups are defined automatically on the basis of the properties of the objects, and objects are then placed in each class, whereas in classification objects are assigned to predefined classes or groups (ZenTut, 2016). Put simply, clustering is the identification of groups of similar objects. By using clustering techniques we can further identify dense and sparse regions in object space and discover the overall distribution pattern and correlations among data attributes (M. Ramageri, 2016). For example, customers can be categorized automatically on the basis of similar purchase behavior and age group using clustering.

Some of the major types of clustering techniques are as follows:

i. Partitioning Methods

ii. Hierarchical methods (agglomerative and divisive)

iii. Density based methods

iv. Grid-based methods

v. Model-based methods
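A partitioning method such as k-means can be sketched in a few lines for one-dimensional data. The customer ages and the hand-picked starting centroids below are hypothetical, and this simplified version runs a fixed number of iterations instead of testing for convergence.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Very small k-means for one-dimensional data (a partitioning method)."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(
                range(len(centroids)), key=lambda i: abs(p - centroids[i])
            )
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical customer ages; the initial centroids are chosen by hand.
ages = [18, 21, 22, 25, 47, 52, 55, 60]
centers, groups = kmeans_1d(ages, centroids=[20, 50])
print(centers, groups)
```

No age groups are defined in advance; the two groups emerge from the data, which is the difference from classification noted above.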

d. Prediction

Prediction is another important data mining technique. It discovers the relationships among independent variables and between dependent and independent variables. In data mining, independent variables are attributes that are already known, and response (dependent) variables are what we want to predict. For example, prediction analysis can be used in sales to predict future profit: if sales is the independent variable, profit could be the dependent variable. Then, based on historical sales and profit data, we can draw a fitted regression curve that is used for profit prediction.

Some of the major types of these methods are as follows

i. Linear Regression

ii. Multivariate Linear Regression

iii. Nonlinear Regression

iv. Multivariate Nonlinear Regression
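For the sales and profit example, the simplest case is a straight line fitted by ordinary least squares. The sketch below uses hypothetical figures chosen so that profit is exactly 1 + 0.2 × sales, which makes the fitted line easy to check by hand.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical historical data: sales (independent), profit (dependent).
sales = [10, 20, 30, 40]
profit = [3, 5, 7, 9]

slope, intercept = fit_line(sales, profit)
# Predict profit for a sales level that has not been observed yet.
predicted = intercept + slope * 50
print(slope, intercept, predicted)
```

With real data the points would not lie exactly on a line, and the fitted slope and intercept would be the values that minimize the squared prediction errors.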

e. Sequential Patterns

Sequential pattern mining is a data mining technique that seeks to discover or identify similar patterns, regular events or trends in transaction data over a business period. For example, a customer's purchase history at different times of the year can be mined with this technique to recommend products with better deals, based on how often the customer bought them in the past.
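A very reduced form of sequential pattern mining is to count how many sequences contain one item followed, at any later point, by another. The sketch below does this for ordered pairs only; the purchase histories are hypothetical, and full algorithms handle longer patterns and time constraints.

```python
from collections import Counter
from itertools import combinations

# Hypothetical purchase histories, each ordered by time.
histories = [
    ["phone", "case", "charger"],
    ["phone", "charger"],
    ["case", "phone", "charger"],
    ["laptop", "mouse"],
]

def frequent_ordered_pairs(sequences, min_support):
    """Count pairs (a, b) where a occurs before b, once per sequence."""
    counts = Counter()
    for seq in sequences:
        # combinations() keeps the original order, so a precedes b in seq.
        pairs = {(a, b) for a, b in combinations(seq, 2) if a != b}
        counts.update(pairs)
    return {pair: n for pair, n in counts.items() if n >= min_support}

patterns = frequent_ordered_pairs(histories, min_support=2)
print(patterns)
```

Unlike the association example, order matters here: "phone then charger" is counted separately from "charger then phone".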

Data Mining in Leeds City Council for Transportation Department

Each of the data mining techniques discussed above has its strengths and weaknesses and suits different purposes. Based on a comparison of these techniques, the Sequential Patterns technique has been chosen for the transport department of Leeds City Council. As discussed earlier, sequential pattern mining is useful for identifying trends, or regular occurrences of similar events. For example, historical data on customers buying a certain product during a certain period of the year can be analyzed to predict and suggest the products that people might want in the coming years. Similarly, this technique can be used by the transport department of Leeds City Council to predict the volume of traffic at different times of the day or year, which can help to avoid traffic jams. For instance, office hours, around 10 in the morning and again in the evening, may bring heavy traffic that causes jams, and heavy traffic in the festive season can also cause problems. Analyzing such historical data can reveal hidden trends and suggest solutions to the most likely problems in the transport system.

Hence, different data mining techniques have been discussed in this document. All of them are used for analytical purposes: to explore data in search of consistent patterns and/or systematic relationships between variables, and then to validate the findings by applying the detected patterns to new subsets of data. The main purpose of data mining is prediction. For Leeds City Council, the Sequential Patterns technique is used to find hidden patterns in the historical vehicle data. The graphs for data visualization developed using APEX can also be useful in data mining.

References

Hassas, S. (2016). Data Mining. 1st ed. [ebook] Available at: http://www.csun.edu/~sh111280/Hassas.pdf [Accessed 15 Nov. 2016].

M. Ramageri, B. (2016). Data Mining Techniques and Applications. 1st ed. [ebook] Pune, Maharashtra, India: Modern Institute of Information Technology and Research, Department of Computer Application. Available at: http://www.ijcse.com/docs/IJCSE10-01-04-51.pdf [Accessed 17 Nov. 2016].

Sas.com. (2016). What is data mining?. [online] Available at: http://www.sas.com/en_us/insights/analytics/data-mining.html [Accessed 15 Nov. 2016].

ZenTut. (2016). Advantages and Disadvantages of Data Mining. [online] Available at: http://www.zentut.com/data-mining/advantages-and-disadvantages-of-data-mining/ [Accessed 15 Nov. 2016].

Associationofbusinesstraining.org. (2016). What is a Pivot Table and what are the Benefits of Utilizing Them in Excel? : Business Article by Association of Business Training. [online] Available at: http://www.associationofbusinesstraining.org/articles/view.php?article_id=11022 [Accessed 17 Nov. 2016].

Priya, G. and Sulatana A., R. (2016). ETL Process in Data Warehouse. 1st ed. [ebook] SRM University. Available at: http://www.srmuniv.ac.in/sites/default/files/files/ETL.pdf [Accessed 18 Nov. 2016].
